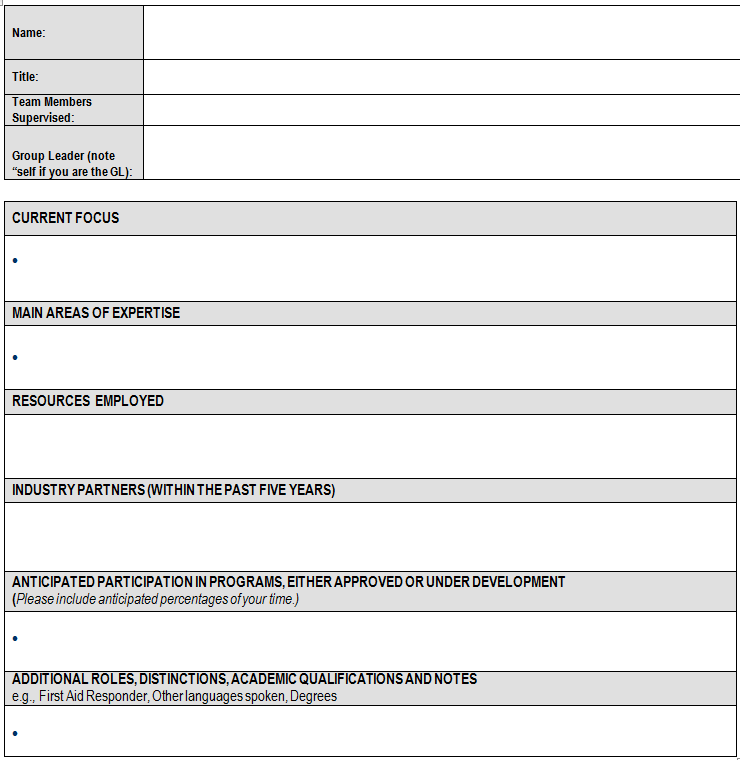

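I would like to text-mine a set of files based on the form below. I can create a corpus where each file is a document (using tm), but I think it might be better to create a corpus where each section of the second table on the form is a document with the following metadata: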

```
Author : John Smith
DateTimeStamp: 2013-04-18 16:53:31
Description :
Heading : Current Focus
ID : Smith-John_e.doc Current Focus
Language : en_CA
Origin : Smith-John_e.doc
Name : John Smith
Title : Manager
TeamMembers : Joe Blow, John Doe
GroupLeader : She who must be obeyed
```

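where Name, Title, TeamMembers and GroupLeader are extracted from the first table on the form. This way, each block of text to be analysed would retain some of its context.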

What is the best way to approach this? I can think of two ways:

- Somehow parse the corpus I already have into sub-corpora.
- Somehow parse each document into sub-documents and create a corpus from those.
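As a rough illustration of the second option, here is a base-R sketch that splits one document's lines into sections at known headings. The sample lines and the `headings` vector are hypothetical, and the sketch assumes each heading sits on its own line, spelled exactly as on the form.

```r
# Hypothetical lines from one converted form (made up; not from the RData file)
lines <- c("Name: John Doe", "Title: Manager",
           "CURRENT RESEARCH FOCUS", "Lorem ipsum dolor sit amet.", "Donec at ipsum est.",
           "MAIN AREAS OF EXPERTISE", "Vestibulum aliquet faucibus tortor.")

# Assumed: the section titles printed on the form
headings <- c("CURRENT RESEARCH FOCUS", "MAIN AREAS OF EXPERTISE")

# TRUE on every line that starts a new section
is_heading <- trimws(lines) %in% headings

# Running count of headings seen so far: 0 = header table, 1 = first section, ...
section_id <- cumsum(is_heading)

# Keep body lines only (drop the header table and the heading lines),
# then group them by section number
body <- !is_heading & section_id > 0
sections <- split(lines[body], section_id[body])
names(sections) <- headings[as.integer(names(sections))]

# One string per section, each of which could become its own document
section_text <- vapply(sections, paste, character(1), collapse = " ")
```

Each element of `section_text` is named after its heading, so the heading can be carried along as metadata.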

Any pointers would be much appreciated.

The form is shown in the image at the top of this question.

Here is an RData file containing a corpus with 2 documents. exc[[1]] comes from a .doc file and exc[[2]] from a .docx file. Both used the form above.

Best answer

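Here's a quick sketch of one approach; hopefully it will prompt someone more talented to stop by and suggest something more efficient and robust... Using the RData file in your question, I found that the doc and docx files have slightly different structures and so need slightly different approaches (although I see in the metadata that your docx is 'fake2.txt', so is it really a docx? I see in your other question that you used a converter outside of R, which must be why it's a txt.)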

```r
library(tm)
```

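First, get the custom metadata for the doc file. As you can see, I'm no regex expert, but working from the innermost gsub() outwards, each line roughly does this: remove the punctuation, then remove the field label (the "word"), then trim leading and trailing whitespace...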
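To make the inside-out order concrete, here is the same chain applied step by step to one made-up line (the input string is hypothetical, not taken from the RData file):

```r
# Hypothetical raw line from the header table
line <- "Name:   John Doe  "

# Innermost call: remove punctuation such as the colon
step1 <- gsub("[[:punct:]]", "", line)     # "Name   John Doe  "
# Middle call: remove the field label
step2 <- gsub("Name", "", step1)           # "   John Doe  "
# Outer call: trim leading and trailing whitespace
step3 <- gsub("^\\s+|\\s+$", "", step2)    # "John Doe"

# The same thing as a single nested expression, as used below
one_liner <- gsub("^\\s+|\\s+$", "", gsub("Name", "", gsub("[[:punct:]]", "", line)))
```

Note that [[:punct:]] also removes hyphens and apostrophes inside values, so a name such as "Jean-Luc" would come out as "JeanLuc".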

```r
# create user-defined local metadata pairs
# (in the doc file, lines 3, 4, 5 and 7 hold the name, title, team members and supervisor)
meta(exc[[1]], type = "corpus", tag = "Name1") <- gsub("^\\s+|\\s+$", "", gsub("Name", "", gsub("[[:punct:]]", "", exc[[1]][3])))
meta(exc[[1]], type = "corpus", tag = "Title") <- gsub("^\\s+|\\s+$", "", gsub("Title", "", gsub("[[:punct:]]", "", exc[[1]][4])))
meta(exc[[1]], type = "corpus", tag = "TeamMembers") <- gsub("^\\s+|\\s+$", "", gsub("Team Members", "", gsub("[[:punct:]]", "", exc[[1]][5])))
meta(exc[[1]], type = "corpus", tag = "ManagerName") <- gsub("^\\s+|\\s+$", "", gsub("Name of your", "", gsub("[[:punct:]]", "", exc[[1]][7])))
```

Now take a look at the result:

```r
# inspect
meta(exc[[1]], type = "corpus")
```

```
Available meta data pairs are:
Author :
DateTimeStamp: 2013-04-22 13:59:28
Description :
Heading :
ID : fake1.doc
Language : en_CA
Origin :
User-defined local meta data pairs are:
$Name1
[1] "John Doe"

$Title
[1] "Manager"

$TeamMembers
[1] "Elise Patton Jeffrey Barnabas"

$ManagerName
[1] "Selma Furtgenstein"
```

Do the same for the docx file:

```r
# create user-defined local metadata pairs
# (in the docx file the same fields are on lines 2, 4, 6 and 8)
meta(exc[[2]], type = "corpus", tag = "Name2") <- gsub("^\\s+|\\s+$", "", gsub("Name", "", gsub("[[:punct:]]", "", exc[[2]][2])))
meta(exc[[2]], type = "corpus", tag = "Title") <- gsub("^\\s+|\\s+$", "", gsub("Title", "", gsub("[[:punct:]]", "", exc[[2]][4])))
meta(exc[[2]], type = "corpus", tag = "TeamMembers") <- gsub("^\\s+|\\s+$", "", gsub("Team Members", "", gsub("[[:punct:]]", "", exc[[2]][6])))
meta(exc[[2]], type = "corpus", tag = "ManagerName") <- gsub("^\\s+|\\s+$", "", gsub("Name of your", "", gsub("[[:punct:]]", "", exc[[2]][8])))
```

Take a look:

```r
# inspect
meta(exc[[2]], type = "corpus")
```

```
Available meta data pairs are:
Author :
DateTimeStamp: 2013-04-22 14:06:10
Description :
Heading :
ID : fake2.txt
Language : en
Origin :
User-defined local meta data pairs are:
$Name2
[1] "Joe Blow"

$Title
[1] "Shift Lead"

$TeamMembers
[1] "Melanie Baumgartner Toby Morrison"

$ManagerName
[1] "Selma Furtgenstein"
```

If you have a large number of documents, wrapping these meta() calls in an lapply() function would be the way to go.
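A minimal base-R sketch of that lapply() idea, assuming each document can be treated as a character vector of lines. The documents, the per-type line numbers and the helper clean_field() are hypothetical; the resulting values could then be assigned to each document with meta() as above.

```r
# Hypothetical documents, one character vector of lines each
docs <- list(
  doc  = c("FORM", "", "Name: John Doe", "Title: Manager",
           "Team Members: Elise Patton, Jeffrey Barnabas"),
  docx = c("Name: Joe Blow", "", "", "Title: Shift Lead", "",
           "Team Members: Melanie Baumgartner, Toby Morrison")
)

# Assumed line number of each field, per document layout
field_lines <- list(
  doc  = c(Name = 3, Title = 4, TeamMembers = 5),
  docx = c(Name = 1, Title = 4, TeamMembers = 6)
)

# Label text to strip from each field
labels <- c(Name = "Name", Title = "Title", TeamMembers = "Team Members")

# The nested gsub() chain from above, wrapped in a hypothetical helper
clean_field <- function(x, label) {
  gsub("^\\s+|\\s+$", "", gsub(label, "", gsub("[[:punct:]]", "", x)))
}

# For every document, pull each field from its line and clean it
fields <- lapply(names(docs), function(d) {
  idx <- field_lines[[d]]
  vapply(names(idx),
         function(f) clean_field(docs[[d]][idx[[f]]], labels[[f]]),
         character(1))
})
names(fields) <- names(docs)
```

Keeping the line numbers in a lookup table means adding another layout is a one-line change rather than another block of meta() calls.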

Now that we have the custom metadata, we can subset the documents to exclude that part of the text:

```r
# create a new corpus that excludes the part of each doc that is now in the metadata.
# Square-bracket indexing keeps only the lines that make up the second table of the form
# (the starting line is slightly different for each doc type)
excBody <- Corpus(VectorSource(c(paste(exc[[1]][13:length(exc[[1]])], collapse = ","),
                                 paste(exc[[2]][9:length(exc[[2]])], collapse = ","))))

# get rid of all the extra whitespace
excBody <- tm_map(excBody, stripWhitespace)
```

Take a look:

```r
inspect(excBody)
```

```
A corpus with 2 text documents

The metadata consists of 2 tag-value pairs and a data frame
Available tags are:
create_date creator
Available variables in the data frame are:
MetaID

[[1]]
|CURRENT RESEARCH FOCUS |,| |,|Lorem ipsum dolor sit amet, consectetur adipiscing elit. |,|Donec at ipsum est, vel ullamcorper enim. |,|In vel dui massa, eget egestas libero. |,|Phasellus facilisis cursus nisi, gravida convallis velit ornare a. |,|MAIN AREAS OF EXPERTISE |,|Vestibulum aliquet faucibus tortor, sed aliquet purus elementum vel. |,|In sit amet ante non turpis elementum porttitor. |,|TECHNOLOGY PLATFORMS, INSTRUMENTATION EMPLOYED |,| Vestibulum sed turpis id nulla eleifend fermentum. |,|Nunc sit amet elit eu neque tincidunt aliquet eu at risus. |,|Cras tempor ipsum justo, ut blandit lacus. |,|INDUSTRY PARTNERS (WITHIN THE PAST FIVE YEARS) |,| Pellentesque facilisis nisl in libero scelerisque mattis eu quis odio. |,|Etiam a justo vel sapien rhoncus interdum. |,|ANTICIPATED PARTICIPATION IN PROGRAMS, EITHER APPROVED OR UNDER DEVELOPMENT |,|(Please include anticipated percentages of your time.) |,| Proin vitae ligula quis enim vulputate sagittis vitae ut ante. |,|ADDITIONAL ROLES, DISTINCTIONS, ACADEMIC QUALIFICATIONS AND NOTES |,|e.g., First Aid Responder, Other languages spoken, Degrees, Charitable Campaign |,|Canvasser (GCWCC), OSH representative, Social Committee |,|Sed nec tellus nec massa accumsan faucibus non imperdiet nibh. |,,

[[2]]
CURRENT RESEARCH FOCUS,,* Lorem ipsum dolor sit amet, consectetur adipiscing elit.,* Donec at ipsum est, vel ullamcorper enim.,* In vel dui massa, eget egestas libero.,* Phasellus facilisis cursus nisi, gravida convallis velit ornare a.,MAIN AREAS OF EXPERTISE,* Vestibulum aliquet faucibus tortor, sed aliquet purus elementum vel.,* In sit amet ante non turpis elementum porttitor. ,TECHNOLOGY PLATFORMS, INSTRUMENTATION EMPLOYED,* Vestibulum sed turpis id nulla eleifend fermentum.,* Nunc sit amet elit eu neque tincidunt aliquet eu at risus.,* Cras tempor ipsum justo, ut blandit lacus.,INDUSTRY PARTNERS (WITHIN THE PAST FIVE YEARS),* Pellentesque facilisis nisl in libero scelerisque mattis eu quis odio.,* Etiam a justo vel sapien rhoncus interdum.,ANTICIPATED PARTICIPATION IN PROGRAMS, EITHER APPROVED OR UNDER DEVELOPMENT ,(Please include anticipated percentages of your time.),* Proin vitae ligula quis enim vulputate sagittis vitae ut ante.,ADDITIONAL ROLES, DISTINCTIONS, ACADEMIC QUALIFICATIONS AND NOTES,e.g., First Aid Responder, Other languages spoken, Degrees, Charitable Campaign Canvasser (GCWCC), OSH representative, Social Committee,* Sed nec tellus nec massa accumsan faucibus non imperdiet nibh.,,
```

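Now the documents are ready for text mining, with the data from the first table moved out of the document text and into the document metadata.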

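Of course, all this depends on the documents being highly regular. If the first table has a different number of lines in each document, the simple indexing method will likely fail (give it a try and see what happens), and something more robust will be needed.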
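One way to make the indexing less fragile, sketched here in base R: search for the first heading of the second table with grep() instead of hard-coding its line number (13 and 9 above). The documents and the helper body_of() are hypothetical, and the sketch still assumes the heading text is spelled identically in every file.

```r
# Hypothetical documents whose header tables have different lengths
doc_a <- c("Name: John Doe", "Title: Manager", "Team Members: A, B",
           "CURRENT RESEARCH FOCUS", "Lorem ipsum.")
doc_b <- c("Name: Joe Blow", "", "Title: Shift Lead", "", "Team Members: C, D", "",
           "CURRENT RESEARCH FOCUS", "Donec at ipsum est.")

# Return the lines from the first heading of the second table onwards
body_of <- function(lines, first_heading = "CURRENT RESEARCH FOCUS") {
  start <- grep(first_heading, lines, fixed = TRUE)[1]  # NA if not found
  if (is.na(start)) stop("heading not found: ", first_heading)
  lines[start:length(lines)]
}

bodies <- lapply(list(doc_a, doc_b), body_of)

# Collapse each body into one string, as done above before building excBody
body_text <- vapply(bodies, paste, character(1), collapse = ",")
```

Failing loudly when the heading is missing is deliberate: a silently wrong start line would leak header-table text into the body.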

UPDATE: a more robust approach

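Having read the question more carefully and got a bit more education about regex, here is a more robust method that doesn't rely on indexing specific lines of the documents. Instead, we use regular expressions to extract the text between two words, both to make the metadata and to split up the document.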
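The key pieces are the lookbehind (?<=...) and lookahead (?=...), which anchor the match without including the anchor words, and the lazy .*?, which stops at the first occurrence of the closing word. A small base-R demonstration on a made-up string (not from the RData file):

```r
# Hypothetical collapsed text, shaped like the comma-joined document below
txt <- "Name: John Doe,Title: Manager,Team Members: Elise Patton,Title: Other"

# (?<=Name) : start right after "Name" (lookbehind, not part of the match)
# .*?       : as few characters as possible (lazy)
# (?=Title) : stop right before "Title" (lookahead, not part of the match)
lazy <- regmatches(txt, gregexpr("(?<=Name).*?(?=Title)", txt, perl = TRUE))[[1]]

# A greedy .* runs on to the LAST "Title" in the string instead
greedy <- regmatches(txt, gregexpr("(?<=Name).*(?=Title)", txt, perl = TRUE))[[1]]

# Clean up as in the answer: drop punctuation, trim whitespace
name <- trimws(gsub("[[:punct:]]", "", lazy))
```

Here lazy is ": John Doe," while greedy swallows everything up to the second "Title", which is why the answer uses .*?. perl = TRUE is needed because lookarounds are PCRE syntax.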

Here's how we make the user-defined local metadata (an alternative to the method above):

```r
library(gdata) # for the trim() function (base R's trimws() also works, R >= 3.2.0)

txt <- paste0(as.character(exc[[1]]), collapse = ",")

# inspect the document to identify the words on either side of the string
# we want, so 'Name' and 'Title' are on either side of 'John Doe'
extract <- regmatches(txt, gregexpr("(?<=Name).*?(?=Title)", txt, perl = TRUE))
meta(exc[[1]], type = "corpus", tag = "Name1") <- trim(gsub("[[:punct:]]", "", extract))

extract <- regmatches(txt, gregexpr("(?<=Title).*?(?=Team)", txt, perl = TRUE))
meta(exc[[1]], type = "corpus", tag = "Title") <- trim(gsub("[[:punct:]]", "", extract))

extract <- regmatches(txt, gregexpr("(?<=Members).*?(?=Supervised)", txt, perl = TRUE))
meta(exc[[1]], type = "corpus", tag = "TeamMembers") <- trim(gsub("[[:punct:]]", "", extract))

extract <- regmatches(txt, gregexpr("(?<=your).*?(?=Supervisor)", txt, perl = TRUE))
meta(exc[[1]], type = "corpus", tag = "ManagerName") <- trim(gsub("[[:punct:]]", "", extract))
```

```r
# inspect
meta(exc[[1]], type = "corpus")
```

```
Available meta data pairs are:
Author :
DateTimeStamp: 2013-04-22 13:59:28
Description :
Heading :
ID : fake1.doc
Language : en_CA
Origin :
User-defined local meta data pairs are:
$Name1
[1] "John Doe"

$Title
[1] "Manager"

$TeamMembers
[1] "Elise Patton Jeffrey Barnabas"

$ManagerName
[1] "Selma Furtgenstein"
```

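Similarly, we can extract the sections of the second table into separate vectors, and then you can either make them into documents and a corpus or just work with them as vectors.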

```r
txt <- paste0(as.character(exc[[1]]), collapse = ",")

CURRENT_RESEARCH_FOCUS <- trim(gsub("[[:punct:]]", "", regmatches(txt, gregexpr("(?<=CURRENT RESEARCH FOCUS).*?(?=MAIN AREAS OF EXPERTISE)", txt, perl = TRUE))))
CURRENT_RESEARCH_FOCUS
# [1] "Lorem ipsum dolor sit amet consectetur adipiscing elit Donec at ipsum est vel ullamcorper enim In vel dui massa eget egestas libero Phasellus facilisis cursus nisi gravida convallis velit ornare a"

MAIN_AREAS_OF_EXPERTISE <- trim(gsub("[[:punct:]]", "", regmatches(txt, gregexpr("(?<=MAIN AREAS OF EXPERTISE).*?(?=TECHNOLOGY PLATFORMS, INSTRUMENTATION EMPLOYED)", txt, perl = TRUE))))
MAIN_AREAS_OF_EXPERTISE
# [1] "Vestibulum aliquet faucibus tortor sed aliquet purus elementum vel In sit amet ante non turpis elementum porttitor"
```
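Rather than writing one line per section, here is a sketch that loops over pairs of consecutive headings and then builds the one-row-per-section table the question asks for. The sample text, the `headings` vector, the helper extract_between() and the metadata values are all assumptions for illustration. Wrapping each heading in \Q...\E makes PCRE treat it literally, so headings containing parentheses or commas are safe.

```r
# Hypothetical collapsed body text, shaped like txt above
txt <- paste("CURRENT RESEARCH FOCUS", "Lorem ipsum dolor sit amet.",
             "MAIN AREAS OF EXPERTISE", "Vestibulum aliquet faucibus tortor.",
             "INDUSTRY PARTNERS", "Etiam a justo vel sapien.", sep = ",")

# Assumed: section headings in the order they appear on the form
headings <- c("CURRENT RESEARCH FOCUS", "MAIN AREAS OF EXPERTISE", "INDUSTRY PARTNERS")

# Text between one heading and the next; with end = NA, run to the end of txt
extract_between <- function(txt, start, end = NA) {
  stop_at <- if (is.na(end)) "$" else paste0("\\Q", end, "\\E")
  pattern <- paste0("(?<=\\Q", start, "\\E).*?(?=", stop_at, ")")
  hit <- regmatches(txt, regexpr(pattern, txt, perl = TRUE))
  if (length(hit) == 0) return(NA_character_)
  trimws(gsub("[[:punct:]]", "", hit))
}

# Pair each heading with the one after it (the last one has no successor)
ends <- c(headings[-1], NA)
sections <- mapply(extract_between, start = headings, end = ends,
                   MoreArgs = list(txt = txt))

# One row per section, each carrying the person's metadata (values made up)
section_docs <- data.frame(
  Heading = names(sections),
  Name = "John Doe",
  Title = "Manager",
  text = unname(sections),
  stringsAsFactors = FALSE
)
```

With tm, section_docs$text could then be passed to VectorSource() in the same way excBody is built above.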

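And so on. I hope that's closer to what you're after. If not, it may be best to break your task down into a set of smaller, more focused questions and ask them separately (or wait for one of the experts to stop by this question!).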

A similar question, "r - Extracting semi-structured text from Word documents", can be found on Stack Overflow: https://stackoverflow.com/questions/16105973/