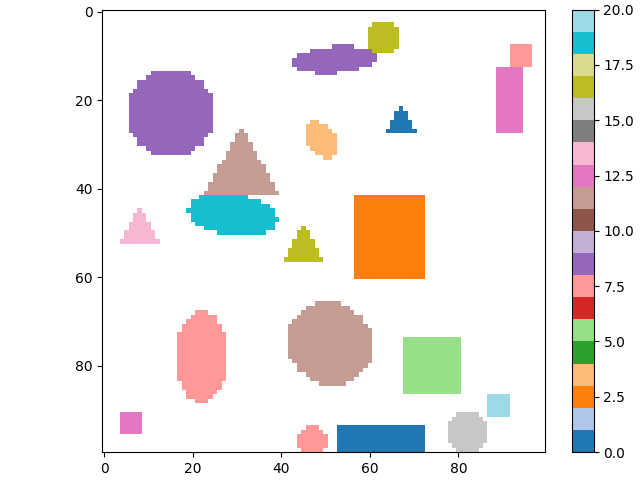

假设您有一个分割图,其中每个对象都由一个唯一索引标识,例如看起来像这样:

对于每个对象,我想保存它覆盖了哪些像素,但到目前为止我只能想出标准的 for 循环。不幸的是,对于具有数千个单独对象的较大图像,结果证明这非常慢——至少对于我的真实数据而言。我能以某种方式加快速度吗?

import numpy as np

import matplotlib as mpl

import matplotlib.pyplot as plt

from skimage.draw import random_shapes

# please ignore that this does not always produce 20 objects each with a

# unique color. it is simply a quick way to produce data that is similar to

# my problem that can also be visualized.

segmap, labels = random_shapes(

(100, 100), 20, min_size=6, max_size=20, multichannel=False,

intensity_range=(0, 20), num_trials=100,

)

segmap = np.ma.masked_where(segmap == 255, segmap)

object_idxs = np.unique(segmap)[:-1]

objects = np.empty(object_idxs.size, dtype=[('idx', 'i4'), ('pixels', 'O')])

# important bit here:

# this I can vectorize

objects['idx'] = object_idxs

# but this I cannot. and it takes forever.

for i in range(object_idxs.size):

objects[i]['pixels'] = np.where(segmap == i)

# just plotting here

fig, ax = plt.subplots(constrained_layout=True)

image = ax.imshow(

segmap, cmap='tab20', norm=mpl.colors.Normalize(vmin=0, vmax=20)

)

fig.colorbar(image)

fig.show()

最佳答案

在循环中使用 np.where 在算法上效率不高,因为时间复杂度 是 O(s n m) 其中 s = object_idxs.size 和 n, m = segmap.shape。此操作可以在 O(n m) 中完成。

使用 Numpy 的一种解决方案是首先选择所有对象像素位置,然后根据它们在 segmap 中的关联对象对它们进行排序,最后根据对象的数量拆分它们。这是代码:

background = np.max(segmap)

mask = segmap != background

objects = segmap[mask]

uniqueObjects, counts = np.unique(objects, return_counts=True)

ordering = np.argsort(objects)

i, j = np.where(mask)

indices = np.vstack([i[ordering], j[ordering]])

indicesPerObject = np.split(indices, counts.cumsum()[:-1], axis=1)

objects = np.empty(uniqueObjects.size, dtype=[('idx', 'i4'), ('pixels', 'O')])

objects['idx'] = uniqueObjects

for i in range(uniqueObjects.size):

# Use `tuple(...)` to get the exact same type as the initial code here

objects[i]['pixels'] = tuple(indicesPerObject[i])

# In case the conversion to tuple is not required, the loop can also be accelerated:

# objects['pixels'] = indicesPerObject

关于python - 有没有办法加快 numpy.where 的循环?,我们在Stack Overflow上找到一个类似的问题: https://stackoverflow.com/questions/71240258/