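My goal is to scrape as many profile links as possible on Khan Academy, then scrape some specific data from each profile and write it to a CSV file.

My problem is simple: the script is too slow.

Here is the script: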

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.common.exceptions import TimeoutException,StaleElementReferenceException,NoSuchElementException
from bs4 import BeautifulSoup
import re
from requests_html import HTMLSession

session = HTMLSession()
r = session.get('https://www.khanacademy.org/computing/computer-programming/programming#intro-to-programming')
r.html.render(sleep=5)
soup=BeautifulSoup(r.html.html,'html.parser')

# first step: find all courses links and put them in a list
courses_links = soup.find_all(class_='link_1uvuyao-o_O-nodeStyle_cu2reh-o_O-nodeStyleIcon_4udnki')
list_courses={}

for links in courses_links:
    courses = links.extract()
    link_course = courses['href']
    title_course= links.find(class_='nodeTitle_145jbuf')
    span_title_course=title_course.span
    text_span=span_title_course.text.strip()
    final_link_course ='https://www.khanacademy.org'+link_course
    list_courses[text_span]=final_link_course

# second step: loop the script down below with each course link in our list
for courses_step in list_courses.values():
    # part 1: make selenium click the "show more" button until it is gone so we can scrape as many profile links as possible
    driver = webdriver.Chrome()
    driver.get(courses_step)
    while True: # might want to change that to do some testing
        try:
            showmore=WebDriverWait(driver, 15).until(EC.presence_of_element_located((By.CLASS_NAME,'button_1eqj1ga-o_O-shared_1t8r4tr-o_O-default_9fm203')))
            showmore.click()
        except TimeoutException:
            break
        except StaleElementReferenceException:
            break

    # part 2: once the page is fully loaded, scrape all profile links and put them in a list
    soup=BeautifulSoup(driver.page_source,'html.parser')
    #find the profile links
    driver.quit()
    profiles = soup.find_all(href=re.compile("/profile/kaid"))
    profile_list=[]
    for links in profiles:
        links_no_list = links.extract()
        text_link = links_no_list['href']
        text_link_nodiscussion = text_link[:-10]
        final_profile_link ='https://www.khanacademy.org'+text_link_nodiscussion
        profile_list.append(final_profile_link)

    #remove profile link duplicates
    profile_list=list(set(profile_list))

    #print number of profiles we got in the course link
    print('in this link:')
    print(courses_step)
    print('we have this number of profiles:')
    print(len(profile_list))

    #create the csv file
    filename = "khan_withprojectandvotes.csv"
    f = open(filename, "w")
    headers = "link, date_joined, points, videos, questions, votes, answers, flags, project_request, project_replies, comments, tips_thx, last_date, number_project, projet_votes, projets_spins, topq_votes, topa_votes, sum_badges, badge_lvl1, badge_lvl2, badge_lvl3, badge_lvl4, badge_lvl5, badge_challenge\n"
    f.write(headers)

    #part 3: for each profile link, scrape the specific data and store them into the csv
    for link in profile_list:
        #print each profile link we are about to scrape
        print("Scraping ",link)

        session = HTMLSession()
        r = session.get(link)
        r.html.render(sleep=5)
        soup=BeautifulSoup(r.html.html,'html.parser')

        badge_list=soup.find_all(class_='badge-category-count')
        badgelist=[]
        if len(badge_list) != 0:
            for number in badge_list:
                text_num=number.text.strip()
                badgelist.append(text_num)
            number_badges=sum(list(map(int, badgelist)))
            number_badges=str(number_badges)
            badge_challenge=str(badgelist[0])
            badge_lvl5=str(badgelist[1])
            badge_lvl4=str(badgelist[2])
            badge_lvl3=str(badgelist[3])
            badge_lvl2=str(badgelist[4])
            badge_lvl1=str(badgelist[5])
        else:
            number_badges='NA'
            badge_challenge='NA'
            badge_lvl5='NA'
            badge_lvl4='NA'
            badge_lvl3='NA'
            badge_lvl2='NA'
            badge_lvl1='NA'

        user_info_table=soup.find('table', class_='user-statistics-table')
        if user_info_table is not None:
            dates,points,videos=[tr.find_all('td')[1].text for tr in user_info_table.find_all('tr')]
        else:
            dates=points=videos='NA'

        user_socio_table=soup.find_all('div', class_='discussion-stat')
        data = {}
        for gettext in user_socio_table:
            category = gettext.find('span')
            category_text = category.text.strip()
            number = category.previousSibling.strip()
            data[category_text] = number

        full_data_keys=['questions','votes','answers','flags raised','project help requests','project help replies','comments','tips and thanks'] #might change answers to answer because when it's 1 it's putting NA instead
        for header_value in full_data_keys:
            if header_value not in data.keys():
                data[header_value]='NA'

        user_calendar = soup.find('div',class_='streak-calendar-scroll-container')
        if user_calendar is not None:
            last_activity = user_calendar.find('span',class_='streak-cell filled')
            try:
                last_activity_date = last_activity['title']
            except TypeError:
                last_activity_date='NA'
        else:
            last_activity_date='NA'

        session = HTMLSession()
        linkq=link+'discussion/questions'
        r = session.get(linkq)
        r.html.render(sleep=5)
        soup=BeautifulSoup(r.html.html,'html.parser')
        topq_votes=soup.find(class_='text_12zg6rl-o_O-LabelXSmall_mbug0d-o_O-votesSum_19las6u')
        if topq_votes is not None:
            topq_votes=topq_votes.text.strip()
            topq_votes=re.findall(r'\d+', topq_votes)
            topq_votes=topq_votes[0]
            #print(topq_votes)
        else:
            topq_votes='0'

        session = HTMLSession()
        linka=link+'discussion/answers'
        r = session.get(linka)
        r.html.render(sleep=5)
        soup=BeautifulSoup(r.html.html,'html.parser')
        topa_votes=soup.find(class_='text_12zg6rl-o_O-LabelXSmall_mbug0d-o_O-votesSum_19las6u')
        if topa_votes is not None:
            topa_votes=topa_votes.text.strip()
            topa_votes=re.findall(r'\d+', topa_votes)
            topa_votes=topa_votes[0]
        else:
            topa_votes='0'

        # click the "show more" button in each profile's project section until it is gone, then scrape data
        with webdriver.Chrome() as driver:
            wait = WebDriverWait(driver,10)
            driver.get(link+'projects')
            while True:
                try:
                    showmore = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR,'[class^="showMore"] > a')))
                    driver.execute_script("arguments[0].click();",showmore)
                except Exception:
                    break
            soup = BeautifulSoup(driver.page_source,'html.parser')
            driver.quit()

        project = soup.find_all(class_='title_1usue9n')
        prjct_number = str(len(project))

        votes_spins=soup.find_all(class_='stats_35behe')
        list_votes=[]
        for votes in votes_spins:
            numbvotes=votes.text.strip()
            numbvotes=re.split(r'\s',numbvotes)
            list_votes.append(numbvotes[0])
        prjct_votes=str(sum(list(map(int, list_votes))))

        list_spins=[]
        for spins in votes_spins:
            numspins=spins.text.strip()
            numspins=re.split(r'\s',numspins)
            list_spins.append(numspins[3])
        number_spins=list(map(int, list_spins))
        number_spins = [0 if i < 0 else i for i in number_spins]
        prjct_spins=str(sum(number_spins))

        f.write(link + "," + dates + "," + points.replace("," , "") + "," + videos + "," + data['questions'] + "," + data['votes'] + "," + data['answers'] + "," + data['flags raised'] + "," + data['project help requests'] + "," + data['project help replies'] + "," + data['comments'] + "," + data['tips and thanks'] + "," + last_activity_date + "," + prjct_number + "," + prjct_votes + "," + prjct_spins + "," + topq_votes + "," + topa_votes + "," + number_badges + "," + badge_lvl1 + ',' + badge_lvl2 + ',' + badge_lvl3 + ',' + badge_lvl4 + ',' + badge_lvl5 + ',' + badge_challenge + ',' + "\n")

Do you have any suggestions? (Some code would help me understand.)

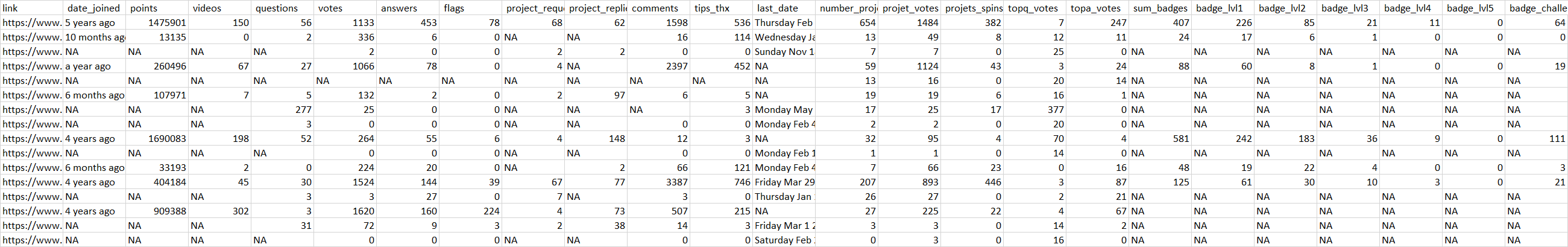

所需的输出应如下所示(但行数更多):

Best answer

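One reason the script takes so long is the sheer number of requests it sends; one way to cut the run time is to reduce that number. You can do this by using the site's internal API to fetch the profile data. For example, sending a GET request to https://www.khanacademy.org/api/internal/user/discussion/summary?username=user_name&lang=en (with user_name replaced by an actual username) returns the profile data you need (and more) as JSON, so you don't have to scrape many separate pages. You can then extract the data from the JSON output and convert it to CSV. You will need Selenium only to get the discussion data and to find the list of usernames. This will greatly reduce the script's run time.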
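As a rough illustration of that approach, the sketch below builds the summary URL for each username, fetches the JSON with a small thread pool so the requests overlap, and writes selected fields to a CSV file. It uses only the standard library. The helper names (`summary_url`, `fetch_summary`, `fetch_many`, `to_row`, `write_csv`) and the `fields` argument are hypothetical; the keys actually present in the JSON response are not listed here, so check a real response before choosing them.

```python
import csv
import json
import urllib.parse
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Endpoint quoted in the answer above.
SUMMARY_URL = "https://www.khanacademy.org/api/internal/user/discussion/summary"


def summary_url(username, lang="en"):
    """Build the summary URL for one username."""
    query = urllib.parse.urlencode({"username": username, "lang": lang})
    return f"{SUMMARY_URL}?{query}"


def fetch_summary(username, timeout=10):
    """Fetch and decode the JSON summary for one username."""
    with urllib.request.urlopen(summary_url(username), timeout=timeout) as resp:
        return json.load(resp)


def fetch_many(usernames, fetch=fetch_summary, max_workers=8):
    """Fetch several summaries concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, usernames))


def to_row(username, summary, fields):
    """Flatten one summary dict into a CSV row, using 'NA' for missing keys.

    `fields` is supplied by the caller; the names are assumptions until
    checked against a real response.
    """
    return [username] + [summary.get(field, "NA") for field in fields]


def write_csv(path, rows, fields):
    """Write a header line followed by the data rows."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["username"] + list(fields))
        writer.writerows(rows)
```

A typical call would be `summaries = fetch_many(usernames)`, then `rows = [to_row(u, s, fields) for u, s in zip(usernames, summaries)]`, then `write_csv("profiles.csv", rows, fields)`. Keeping `max_workers` small avoids hammering the server.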

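Side note: even the module links can be extracted from a JS variable that is parsed when the main URL is scraped. That variable holds JSON that stores the class data, including the links.

Here is the code to do that: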

import json

import bs4
import requests

URL = "https://www.khanacademy.org/computing/computer-programming/programming#intro-to-programming"
BASE_URL = "https://www.khanacademy.org"

response = requests.get(URL)
soup = bs4.BeautifulSoup(response.content, 'lxml')

# The page data sits in an inline <script>; index 18 depends on the page layout and may change.
script = soup.find_all('script')[18].text
# Drop any non-ASCII characters before parsing.
script = script.encode('ascii', errors='ignore').decode('ascii').strip()
# Keep only the JSON assigned to the app-entry variable, then trim the trailing two characters.
script = script.split('{window["./javascript/app-shell-package/app-entry.js"] = ')[1]
script = script[:-2]
json_content = json.loads(script)

You can extract the module links from that JSON and query those instead.
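The layout of that JSON is not described here, so one hedged way to pull links out of it is to walk the whole structure and keep every string that looks like a course path. The sketch below does this; `find_links`, `absolute_links`, and the `/computing/` prefix are assumptions chosen for illustration, not part of the site's API.

```python
def find_links(node, prefix="/computing/"):
    """Recursively collect every string value that starts with `prefix`."""
    found = []
    if isinstance(node, dict):
        for value in node.values():
            found.extend(find_links(value, prefix))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_links(item, prefix))
    elif isinstance(node, str) and node.startswith(prefix):
        found.append(node)
    return found


def absolute_links(json_content, base_url="https://www.khanacademy.org",
                   prefix="/computing/"):
    """Return de-duplicated absolute URLs, in first-seen order."""
    unique_paths = dict.fromkeys(find_links(json_content, prefix))
    return [base_url + path for path in unique_paths]
```

Calling `absolute_links(json_content)` on the parsed object would then give a list of URLs to request. If the real structure turns out to be regular, indexing into it directly is simpler and less likely to pick up unrelated paths.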

Source: the Stack Overflow question "python-3.x - Is there a way to make Selenium work asynchronously?": https://stackoverflow.com/questions/55433083/